Scalable Real-Time Recurrent Learning Using Columnar-Constructive Networks

Updated: 2023-08-31 16:10:46

Khurram Javed, Haseeb Shah, Richard S. Sutton, Martha White; 24(256):1-34, 2023.

Abstract

Constructing states from sequences of observations is an important component of reinforcement learning agents. One solution for state construction is to use recurrent neural networks. Back-propagation through time (BPTT) and real-time recurrent learning (RTRL) are two popular gradient-based methods for recurrent learning. BPTT requires complete trajectories of observations before it can compute the gradients and is unsuitable for online
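As a rough illustration of the contrast the abstract draws between BPTT and RTRL, the sketch below runs RTRL on a single scalar tanh unit. It is not the columnar-constructive method of the paper: the function name `rtrl_scalar`, the weights `w` and `u`, and the zero initial state are all assumptions made for illustration. The point is only that the sensitivities of the hidden state are carried forward one step at a time, so a gradient is available at every step without storing the trajectory.

```python
import math


def rtrl_scalar(xs, w, u):
    """Run h_t = tanh(w * h_{t-1} + u * x_t) and carry dh/dw and dh/du forward.

    Hypothetical toy model for illustration only; h_0 is assumed to be 0.
    Returns the final hidden state and its derivatives w.r.t. w and u.
    """
    h, dh_dw, dh_du = 0.0, 0.0, 0.0
    for x in xs:
        h_new = math.tanh(w * h + u * x)
        gate = 1.0 - h_new * h_new  # derivative of tanh at the pre-activation
        # Each sensitivity depends only on the previous step's values,
        # so nothing from earlier in the sequence needs to be kept.
        dh_dw = gate * (h + w * dh_dw)
        dh_du = gate * (x + w * dh_du)
        h = h_new
    return h, dh_dw, dh_du


if __name__ == "__main__":
    h, gw, gu = rtrl_scalar([0.5, -1.0, 0.25, 0.8], w=0.7, u=-0.3)
    print(h, gw, gu)
```

For a network with many fully connected recurrent units, the same bookkeeping requires one sensitivity per (unit, parameter) pair, which is the cost that makes plain RTRL hard to scale.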

Turn a static SVG into an interactive one, with Flourish

August 30, 2023. Topic: Apps (Flourish, illustration, SVG).

It’s straightforward to share a static SVG online, but maybe you want tooltips or for elements to highlight when you hover over them. Flourish has a new template to make the interactions easier. Seems promising.

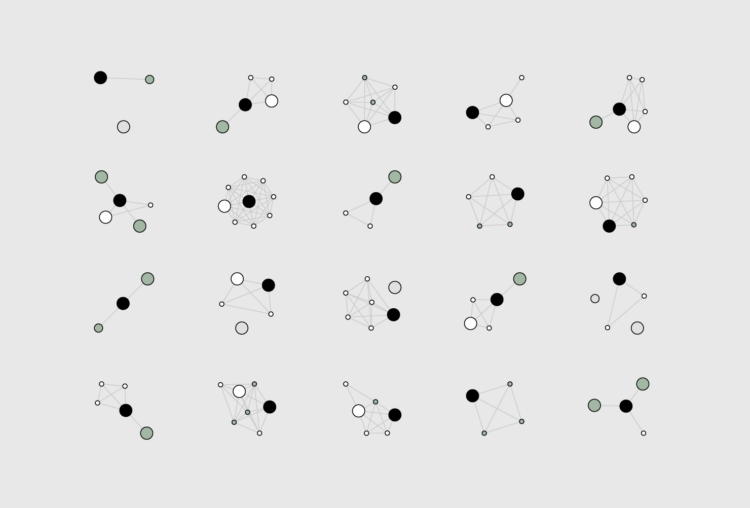

How I Made That: Network Diagrams of All the Household Types

By Nathan Yau. Members only. Topics: Illustrator, Python, R.

Process the data into a usable format, which makes the visualization part more straightforward.

The How I Made That series describes the process behind a graphic and includes code and data to work with.

With visualization, there’s a lot of filtering and aggregation so that it’s easier to see general patterns. But lately I’ve been more curious about what we can see from visualizing everything. So I made network diagrams for 4,708 household types in the United States. Here’s how I made them using Python and R.

Visualization Integration

August 24, 2023. Members only. Topic: The Process (tools).

Welcome to The Process, where we look closer at how the charts get made. This is issue 253. I’m Nathan Yau.

Visualization tools have gotten better over the years, and the major ones improved their range so you can do more with a single tool. But, if you want to maximize fun, a mixed toolbox is still best.

The Process is a weekly newsletter on how visualization tools, rules, and guidelines work in practice. I publish every Thursday. Get it in your inbox or read it on FlowingData.

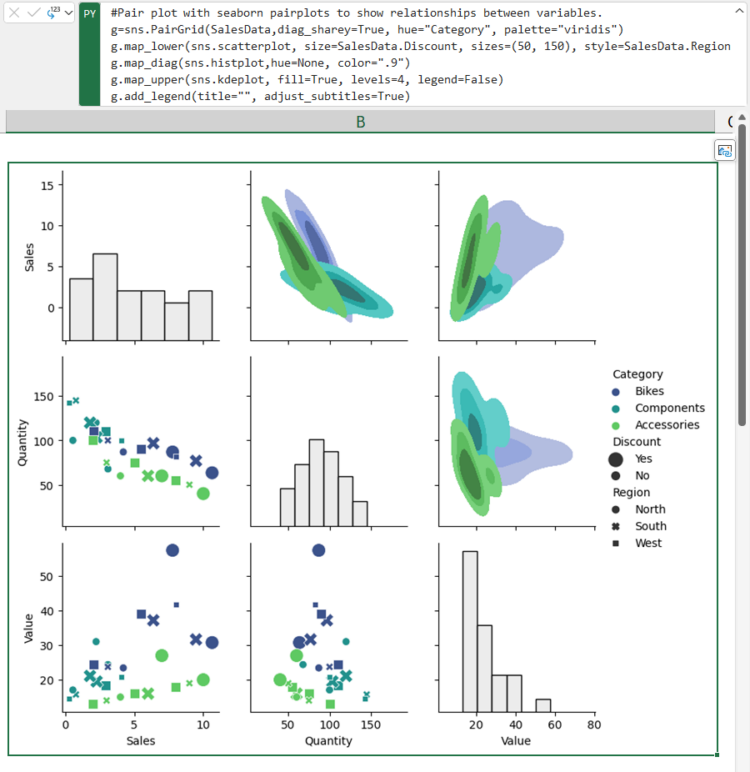

Python is coming to Excel

August 23, 2023. Topic: Software (Excel, Python).

Excel is getting a bump in capabilities with Python integration. From Microsoft:

"Excel users now have access to powerful analytics via Python for visualizations, cleaning data, machine learning, predictive analytics, and more. Users can now create end to end solutions that seamlessly combine Excel and Python, all within Excel. Using Excel’s built-in connectors and Power Query, users can easily bring external data into Python in Excel workflows. Python in Excel is compatible with the tools users already know and love, such as formulas, PivotTables, and Excel charts."

Sounds fun for both Excel users and Python developers. It’s headed
